[1]:

# NBVAL_IGNORE_OUTPUT

import random

import io

import csv

import numpy as np

import matplotlib.pyplot as plt

from clkhash.field_formats import *

from clkhash.schema import Schema

from clkhash.comparators import NgramComparison, ExactComparison, NumericComparison

from clkhash.clk import generate_clk_from_csv

Explanantion of the different comparison techniques¶

The clkhash library is based on the concept of a CLK. This is a special type of Bloom filter, and a Bloom filter is a probabilistic data structure that allow space-efficient testing of set membership. By first tokenising a record and then inserting those tokens into a CLK, the comparison of CLKs approximates the comparisons of the sets of tokens of the CLKs.

The challenge lies in finding good tokenisation strategies, as they define what is considered similiar and what is not. We call these tokenisation strategies comparison techniques.

With Schema v3, we currently support three different comparison techniques:

ngram comparison

exact comparison

numeric comparison

In this notebook we describe how these techniques can be used and what type of data they are best suited.

n-gram Comparison¶

n-grams are a popular technique for approximate string matching.

An n-gram is a n-tuple of characters which follow one another in a given string. For example, the 2-grams of the string ‘clkhash’ are ‘ c’, ‘cl’, ‘lk’, ‘kh’, ‘ha’, ‘as’, ‘sh’, ‘h ‘. Note the white- space in the first and last token. They serve the purpose to a) indicate the beginning and end of a word, and b) gives every character in the input text a representation in two tokens.

The number of n-grams in common defines a similiarity measure for comparing strings. The strings ‘clkhash’ and ‘clkhush’ have 6 out of 8 2-grams in common, whereas ‘clkhash’ and ‘anonlink’ have none out of 9 in common.

A positional n-gram also encodes the position of the n-gram within the word. The positional 2-grams of ‘clkhash’ are ‘1 c’, ‘2 cl’, ‘3 lk’, ‘4 kh’, ‘5 ha’, ‘6 as’, ‘7 sh’, ‘8 h ‘. Positional n-grams can be useful for comparing words where the position of the characters are important, e.g., postcodes or phone numbers.

n-gram comparison of strings is tolerant to spelling mistakes, as one wrong character will only affect n n-grams. Thus, the larger you choose ‘n’, the more the error propagates.

Exact Comparison¶

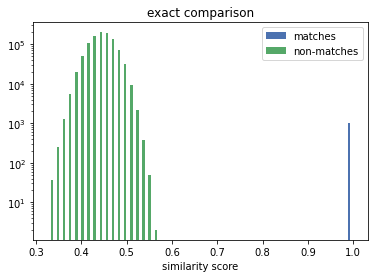

The exact comparison technique creates high similarity scores if inputs are identical, and low otherwise. This can be useful when comparing data like credit card numbers or email addresses. It is a good choice whenever data is either an exact match or has no similarity at all. The main advantage of the Exact Comparison technique is that it better separates the similarity scores of the matches from the non-matches (but cannot acount for errors).

We will show this with the following experiment. First, we create a dataset consisting of random 6-digit numbers. Then we compare the dataset with itself, once encoded with the Exact Comparison, and twice encoded with the Ngram Comparison (uni- and bi-grams) technique.

[2]:

data = [[i, x] for i, x in enumerate(random.sample(range(1000000), k=1000))]

a_csv = io.StringIO()

csv.writer(a_csv).writerows(data)

We define three different schemas, one for each comparison technique.

[3]:

unigram_fields = [

Ignore('rec_id'),

IntegerSpec('random', FieldHashingProperties(comparator=NgramComparison(1, True), strategy=BitsPerFeatureStrategy(300))),

]

unigram_schema = Schema(unigram_fields, 512)

bigram_fields = [

Ignore('rec_id'),

IntegerSpec('random', FieldHashingProperties(comparator=NgramComparison(2, True), strategy=BitsPerFeatureStrategy(300))),

]

bigram_schema = Schema(bigram_fields, 512)

exact_fields = [

Ignore('rec_id'),

IntegerSpec('random', FieldHashingProperties(comparator=ExactComparison(), strategy=BitsPerFeatureStrategy(300))),

]

exact_schema = Schema(exact_fields, 512)

secret_key = 'password1234'

[4]:

from bitarray import bitarray

import base64

import anonlink

def grouped_sim_scores_from_clks(clks_a, clks_b, threshold):

"""returns the pairwise similarity scores for the provided clks, grouped into matches and non-matches"""

results_candidate_pairs = anonlink.candidate_generation.find_candidate_pairs(

[clks_a, clks_b],

anonlink.similarities.dice_coefficient,

threshold

)

matches = []

non_matches = []

sims, ds_is, (rec_id0, rec_id1) = results_candidate_pairs

for sim, rec_i0, rec_i1 in zip(sims, rec_id0, rec_id1):

if rec_i0 == rec_i1:

matches.append(sim)

else:

non_matches.append(sim)

return matches, non_matches

generate the CLKs according to the three different schemas.

[5]:

a_csv.seek(0)

clks_a_unigram = generate_clk_from_csv(a_csv, secret_key, unigram_schema, header=False)

a_csv.seek(0)

clks_a_bigram = generate_clk_from_csv(a_csv, secret_key, bigram_schema, header=False)

a_csv.seek(0)

clks_a_exact = generate_clk_from_csv(a_csv, secret_key, exact_schema, header=False)

generating CLKs: 100%|██████████| 1.00k/1.00k [00:00<00:00, 9.90kclk/s, mean=229, std=5.8]

generating CLKs: 100%|██████████| 1.00k/1.00k [00:00<00:00, 25.5kclk/s, mean=228, std=5.89]

generating CLKs: 100%|██████████| 1.00k/1.00k [00:00<00:00, 24.8kclk/s, mean=227, std=5.73]

We do an exhaustive pairwise comparison for the CLKs and group the similarity scores into ‘matches’ - the similarity scores for the correct linkage - and non-matches.

[6]:

sims_matches_unigram, sims_non_matches_unigram = grouped_sim_scores_from_clks(clks_a_unigram, clks_a_unigram, 0.0)

sims_matches_bigram, sims_non_matches_bigram = grouped_sim_scores_from_clks(clks_a_bigram, clks_a_bigram, 0.0)

sims_matches_exact, sims_non_matches_exact = grouped_sim_scores_from_clks(clks_a_exact, clks_a_exact, 0.0)

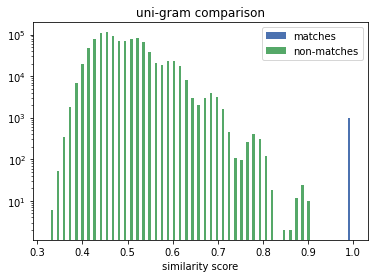

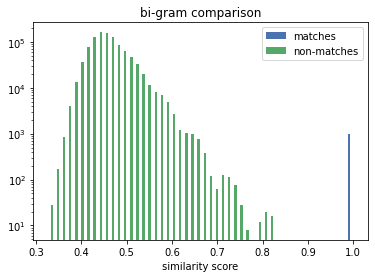

We will plot the similarity scores as histograms. Note the log scale of the y-axis.

[7]:

# NBVAL_IGNORE_OUTPUT

import matplotlib.pyplot as plt

plt.style.use('seaborn-deep')

plt.hist([sims_matches_unigram, sims_non_matches_unigram], bins=50, label=['matches', 'non-matches'])

plt.legend(loc='upper right')

plt.yscale('log')

plt.xlabel('similarity score')

plt.title('uni-gram comparison')

plt.show()

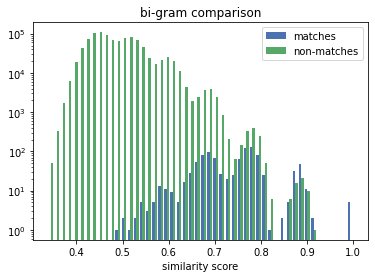

plt.hist([sims_matches_bigram, sims_non_matches_bigram], bins=50, label=['matches', 'non-matches'])

plt.legend(loc='upper right')

plt.yscale('log')

plt.xlabel('similarity score')

plt.title('bi-gram comparison')

plt.show()

plt.hist([sims_matches_exact, sims_non_matches_exact], bins=50, label=['matches', 'non-matches'])

plt.legend(loc='upper right')

plt.yscale('log')

plt.xlabel('similarity score')

plt.title('exact comparison')

plt.show()

The true matches all lie on the vertical line above the 1.0. We can see that the Exact Comparison technique significantly widens the gap between matches and non-matches. Thus increases the range of available solving thresholds (only similarity scores above are considered a potential match) which provide the correct linkage result.

Numeric Comparison¶

This technique enables numerical comparisons of integers and floating point numbers.

Comparing numbers creates an interesting challenge. The comparison of 1000 with 1001 should lead to the same result as the comparison of 1000 and 999. They are both exactly 1 apart. However, string-based techniques like n-gram comparison will produce very different results, as the first pair has three digits in common, compared to none in the last pair.

We have implemented a technique, where the numerical distance between two numbers relates to the similarity of the produced tokens.

We generate a dataset with one column of random 6-digit integers, and a second dataset where we alter the integers of the first dataset by +/- 100.

[8]:

data_A = [[i, random.randrange(1000000)] for i in range(1000)]

data_B = [[i, x + random.randint(-100,100)] for i,x in data_A]

[9]:

a_csv = io.StringIO()

b_csv = io.StringIO()

csv.writer(a_csv).writerows(data_A)

csv.writer(b_csv).writerows(data_B)

We define two linkage schemas, one for postitional uni-gram comparison and one for numeric comparison.

[10]:

unigram_fields = [

Ignore('rec_id'),

IntegerSpec('random',

FieldHashingProperties(comparator=NgramComparison(1, True),

strategy=BitsPerFeatureStrategy(301))),

]

unigram_schema = Schema(unigram_fields, 512)

bigram_fields = [

Ignore('rec_id'),

IntegerSpec('random',

FieldHashingProperties(comparator=NgramComparison(2, True),

strategy=BitsPerFeatureStrategy(301))),

]

bigram_schema = Schema(unigram_fields, 512)

numeric_fields = [

Ignore('rec_id'),

IntegerSpec('random',

FieldHashingProperties(comparator=NumericComparison(threshold_distance=500, resolution=150),

strategy=BitsPerFeatureStrategy(301))),

]

numeric_schema = Schema(numeric_fields, 512)

secret_key = 'password1234'

[11]:

a_csv.seek(0)

clks_a_unigram = generate_clk_from_csv(a_csv, secret_key, unigram_schema, header=False)

b_csv.seek(0)

clks_b_unigram = generate_clk_from_csv(b_csv, secret_key, unigram_schema, header=False)

a_csv.seek(0)

clks_a_bigram = generate_clk_from_csv(a_csv, secret_key, bigram_schema, header=False)

b_csv.seek(0)

clks_b_bigram = generate_clk_from_csv(b_csv, secret_key, bigram_schema, header=False)

a_csv.seek(0)

clks_a_numeric = generate_clk_from_csv(a_csv, secret_key, numeric_schema, header=False)

b_csv.seek(0)

clks_b_numeric = generate_clk_from_csv(b_csv, secret_key, numeric_schema, header=False)

generating CLKs: 100%|██████████| 1.00k/1.00k [00:00<00:00, 17.4kclk/s, mean=229, std=5.98]

generating CLKs: 100%|██████████| 1.00k/1.00k [00:00<00:00, 18.7kclk/s, mean=229, std=5.85]

generating CLKs: 100%|██████████| 1.00k/1.00k [00:00<00:00, 16.5kclk/s, mean=229, std=5.98]

generating CLKs: 100%|██████████| 1.00k/1.00k [00:00<00:00, 18.1kclk/s, mean=229, std=5.85]

generating CLKs: 100%|██████████| 1.00k/1.00k [00:00<00:00, 3.26kclk/s, mean=228, std=5.86]

generating CLKs: 100%|██████████| 1.00k/1.00k [00:00<00:00, 3.23kclk/s, mean=228, std=5.75]

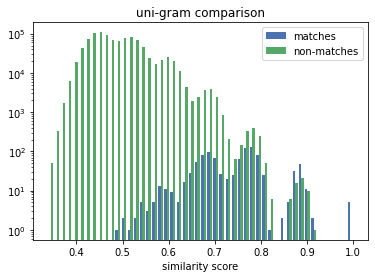

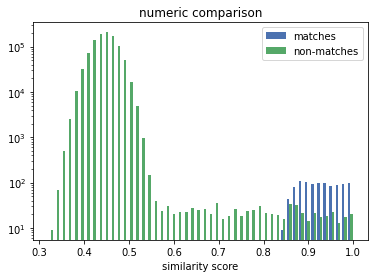

First, we will look at the similarity score distributions. We will group the similiarity scores into matches - the similarity scores for the correct linkage - and non-matches.

[12]:

sims_matches_unigram, sims_non_matches_unigram = grouped_sim_scores_from_clks(clks_a_unigram, clks_b_unigram, 0.0)

sims_matches_bigram, sims_non_matches_bigram = grouped_sim_scores_from_clks(clks_a_bigram, clks_b_bigram, 0.0)

sims_matches_numeric, sims_non_matches_numeric = grouped_sim_scores_from_clks(clks_a_numeric, clks_b_numeric, 0.0)

[13]:

# NBVAL_IGNORE_OUTPUT

plt.style.use('seaborn-deep')

plt.hist([sims_matches_unigram, sims_non_matches_unigram], bins=50, label=['matches', 'non-matches'])

plt.legend(loc='upper right')

plt.yscale('log')

plt.xlabel('similarity score')

plt.title('uni-gram comparison')

plt.show()

plt.hist([sims_matches_bigram, sims_non_matches_bigram], bins=50, label=['matches', 'non-matches'])

plt.legend(loc='upper right')

plt.yscale('log')

plt.xlabel('similarity score')

plt.title('bi-gram comparison')

plt.show()

plt.hist([sims_matches_numeric, sims_non_matches_numeric], bins=50, label=['matches', 'non-matches'])

plt.legend(loc='upper right')

plt.yscale('log')

plt.xlabel('similarity score')

plt.title('numeric comparison')

plt.show()

The distribution for the numeric comparison is very different to the uni/bi-gram one. The similarity scores of the matches (the correct linkage) in the n-gram case are mixed-in with the scores of the non-matches, making it challenging for a solver to decide if a similarity score denotes a match or a non-match.

The numeric comparison produces similarity scores for matches that mirrors the distribution of the numeric distances. More importanty, there is a good separation between the scores for the matches and the ones for the non-matches. The former are all above 0.8, whereas the latter are almost all (note the log scale) below 0.6.

In the next step, we will see how well the solver can find a linkage solution for the different CLKs.

[14]:

def mapping_from_clks(clks_a, clks_b, threshold):

"""computes a mapping between clks_a and clks_b using the anonlink library"""

results_candidate_pairs = anonlink.candidate_generation.find_candidate_pairs(

[clks_a, clks_b],

anonlink.similarities.dice_coefficient,

threshold

)

solution = anonlink.solving.greedy_solve(results_candidate_pairs)

return set( (a,b) for ((_, a),(_, b)) in solution)

true_matches = set((i,i) for i in range(1000))

def describe_matching_quality(found_matches):

"""computes and prints precision and recall of the found_matches"""

tp = len(true_matches & found_matches)

fp = len(found_matches - true_matches)

fn = len(true_matches - found_matches)

precision = tp / (tp + fp)

recall = tp / (tp + fn)

print('Precision: {:.3f}, Recall: {:.3f}'.format(precision, recall))

[15]:

print('results for numeric comparisons')

print('threshold 0.6:')

describe_matching_quality(mapping_from_clks(clks_a_numeric, clks_b_numeric, 0.6))

print('threshold 0.7:')

describe_matching_quality(mapping_from_clks(clks_a_numeric, clks_b_numeric, 0.7))

print('threshold 0.8:')

describe_matching_quality(mapping_from_clks(clks_a_numeric, clks_b_numeric, 0.8))

results for numeric comparisons

threshold 0.6:

Precision: 0.907, Recall: 0.905

threshold 0.7:

Precision: 0.907, Recall: 0.905

threshold 0.8:

Precision: 0.915, Recall: 0.905

[16]:

print('results for unigram comparisons')

print('threshold 0.6:')

describe_matching_quality(mapping_from_clks(clks_a_unigram, clks_b_unigram, 0.6))

print('threshold 0.7:')

describe_matching_quality(mapping_from_clks(clks_a_unigram, clks_b_unigram, 0.7))

print('threshold 0.8:')

describe_matching_quality(mapping_from_clks(clks_a_unigram, clks_b_unigram, 0.8))

results for unigram comparisons

threshold 0.6:

Precision: 0.343, Recall: 0.336

threshold 0.7:

Precision: 0.396, Recall: 0.327

threshold 0.8:

Precision: 0.558, Recall: 0.130

As expected, we can see that the solver does a lot better when given the CLKs generated with the numeric comparison technique.

The other thing that stands out is that the results in with the numeric comparison are stable over a wider range of thresholds, in contrast to the unigram comparison, where different thresholds produce different results, thus making it more challenging to find a good threshold.

Conclusions¶

The overall quality of the linkage result is heavily influence by the right choice of comparison technique for each individual feature. In summary: - n-gram comparison is best suited for fuzzy string matching. It can account for localised errors like spelling mistakes. - exact comparison produces high similiarity only for exact matches, low otherwise. This can be useful if the data is noise-free and partial similarities are not relevant. For instance credit card numbers, even if they only differ in one digit they discribe different accounts and are thus just as different then numbers which don’t have any digits in common. - numeric comparison provides a measure of similiarity that relates to the numerical distance of two numbers. Example use-cases are measurements like height or weight, continuous variables like salary.

[ ]: